Gen AI Quality Lifecycle Overview

The rise of AI is transforming knowledge bases and CMS platforms. Our team is adapting our knowledge base for AI agents and establishing end-to-end quality checkpoints.

This initiative presented a unique challenge: there were no established best practices to follow, so our team had to define its own approach.

AI-powered chat has transformed customer support, so teams need to understand how their AI agents perform and gain actionable insights for improvement.

As our understanding of the issues and their root causes has grown, the team has established a quality lifecycle and its main building blocks to help our business partners improve product quality over time.

Challenges

How do we fix these problems and improve the quality of generative AI? We have encountered a few challenges:

Lack of actionable insights

The sheer volume of sessions and data makes it difficult to identify key areas for improvement.

Human-in-the-loop (HITL) quality evaluation is not trustworthy

Quality evaluations by agents were perceived as having wide error bars and being too slow to be useful.

No visibility into RAG

The lack of transparency in retrieval-augmented generation (RAG) makes it impossible to understand underlying issues and implement effective solutions.

Complex process for knowledge updates

The complex and time-consuming content creation process needs streamlining.

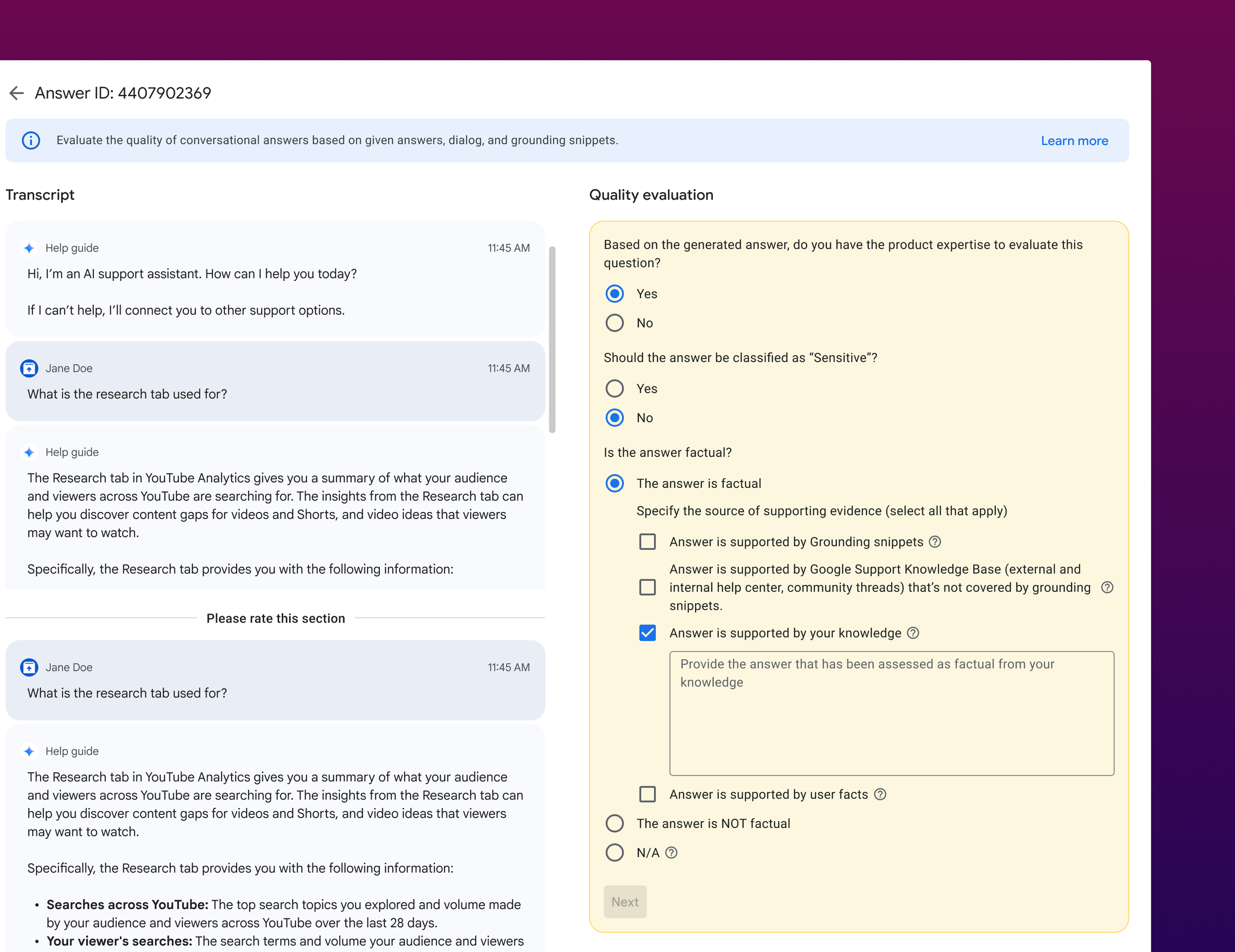

CrowdCompute

Improve the accuracy and velocity of the human evaluation tool for Gen AI quality assessment.

We redesigned the UI of this human quality evaluation tool with the following considerations:

- Accuracy: Clear metric definitions, guidelines, and detailed evidence for each metric.

- Velocity: Side-by-side view and streamlined selection.

- Focus: Elimination of unnecessary survey questions, concentrating on key quality-driving metrics.

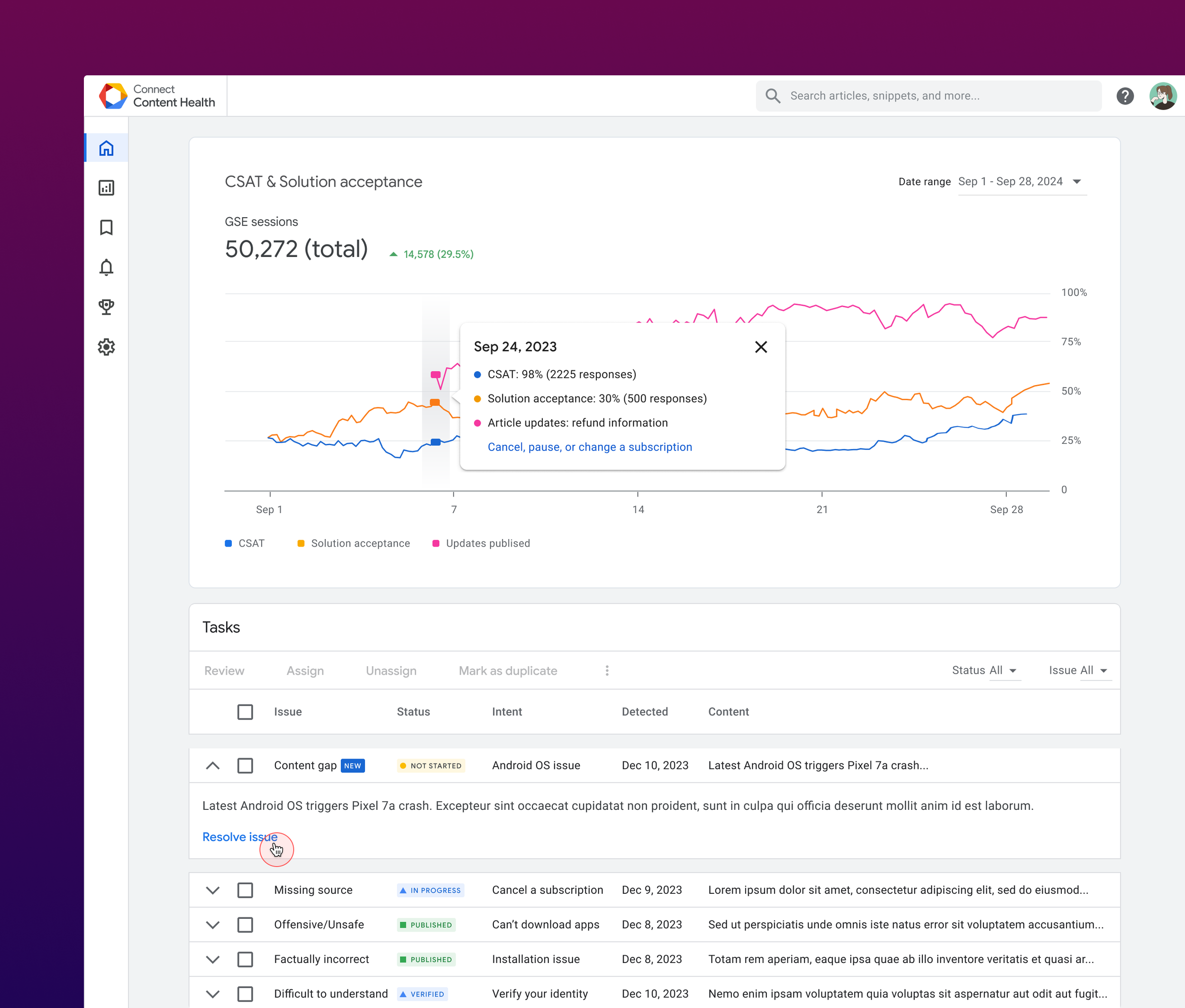

Content Health

Monitor content performance and gain actionable insights with these key features:

- Performance trend visualization on key metrics.

- Actionable insights derived from performance data, human evaluations, and autorater feedback.

- Flexible views tailored to specific business needs, including dedicated dashboards for Gen AI performance, knowledge source performance, and human evaluation results.
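
As a rough illustration of how insights might be derived from human evaluations and autorater feedback together, the sketch below computes per-source pass rates for each rater type and flags sources that score low or where the two raters disagree. The `Evaluation` record, its field names, and the `min_rate` and `max_gap` thresholds are assumptions made for this example, not the actual Content Health schema or logic.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Evaluation:
    # Hypothetical record; field names are illustrative only.
    source_id: str  # knowledge source that grounded the response
    rater: str      # "human" or "autorater"
    passed: bool    # whether the response met the quality bar


def pass_rates(evals):
    """Return {source_id: {rater: pass rate}} from a list of evaluations."""
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))  # [passed, total]
    for e in evals:
        bucket = counts[e.source_id][e.rater]
        bucket[0] += int(e.passed)
        bucket[1] += 1
    return {
        source: {rater: p / n for rater, (p, n) in raters.items()}
        for source, raters in counts.items()
    }


def flag_sources(rates, min_rate=0.8, max_gap=0.2):
    """List sources whose pass rate is low or whose raters disagree."""
    flagged = []
    for source, by_rater in sorted(rates.items()):
        low = any(r < min_rate for r in by_rater.values())
        gap = max(by_rater.values()) - min(by_rater.values())
        if low or gap > max_gap:
            flagged.append(source)
    return flagged
```

A large gap between human and autorater pass rates on the same source is treated here as a signal worth reviewing in its own right, since it may point to an unclear metric definition rather than a content problem.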

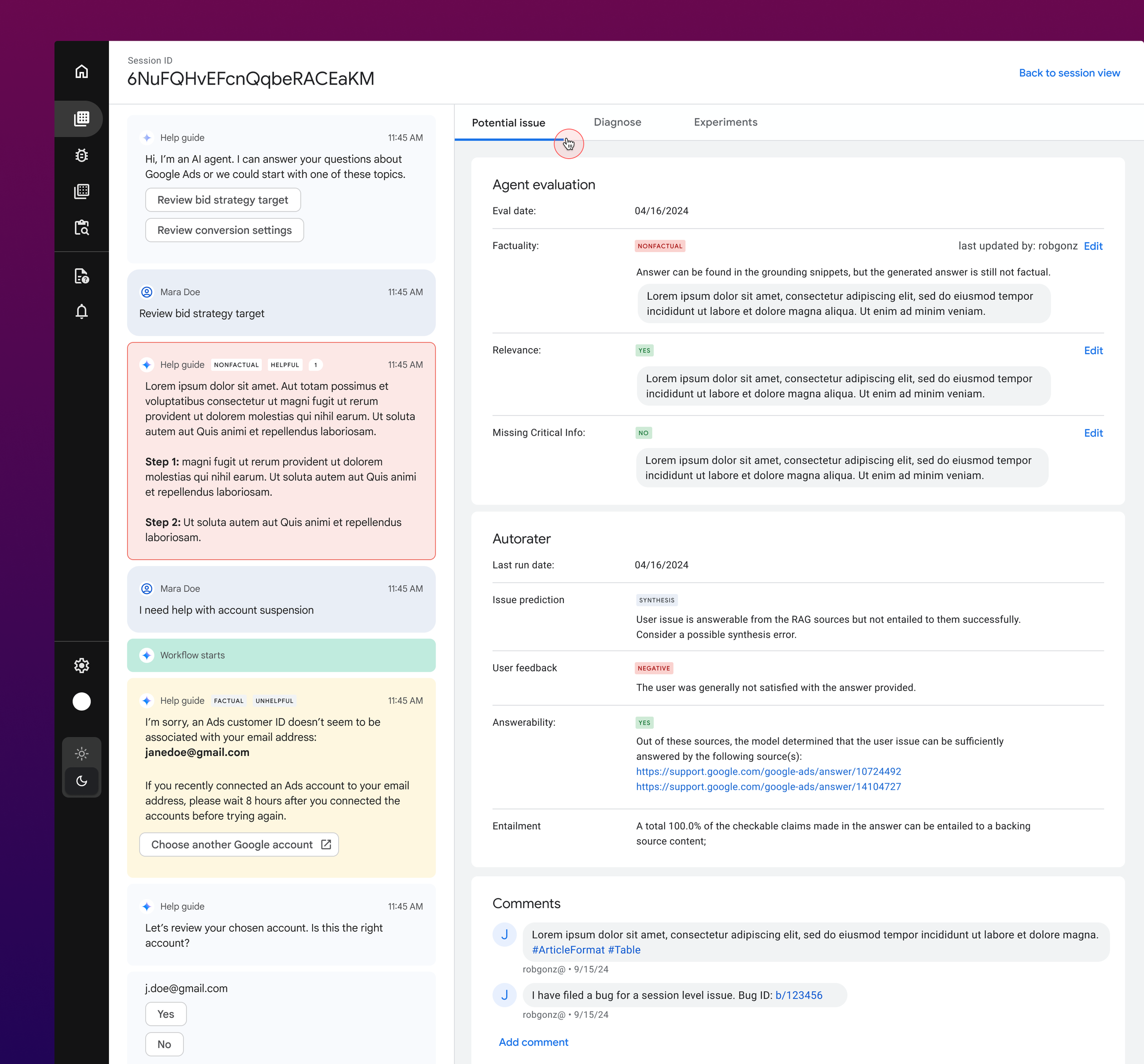

Diagnostic Console

The diagnostic console centralizes quality information, enabling root cause analysis of inaccurate generative AI responses. Key features:

- Comprehensive quality insights: View human and autorater evaluations to quickly assess response quality.

- Deep RAG diagnostics: Understand retrieved content via the Diagnose tab.

- Flexible experimentation: Test hypotheses and optimize performance by adjusting content, ranking, or retrieved knowledge in the Experiments tab.
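
The diagnose-then-experiment workflow described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the console's implementation: `RetrievedChunk`, `diagnose`, `with_boost`, the three failure labels, and the `top_k` cutoff are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class RetrievedChunk:
    # Hypothetical shape of one retrieved item in a RAG trace.
    doc_id: str
    score: float  # retrieval score; higher ranks first


def diagnose(chunks, expected_doc_id, top_k=3):
    """Classify a bad response by where the expected document landed."""
    ranked = sorted(chunks, key=lambda c: c.score, reverse=True)
    ids = [c.doc_id for c in ranked]
    if expected_doc_id not in ids:
        return "retrieval_miss"    # content gap or retrieval failure
    if ids.index(expected_doc_id) >= top_k:
        return "ranking_issue"     # retrieved, but ranked below the cutoff
    return "generation_issue"      # the right content was within the cutoff


def with_boost(chunks, doc_id, boost):
    """Experiment: return a copy with one document's score raised."""
    return [
        RetrievedChunk(c.doc_id, c.score + boost if c.doc_id == doc_id else c.score)
        for c in chunks
    ]


trace = [
    RetrievedChunk("faq-billing", 0.9),
    RetrievedChunk("faq-refunds", 0.8),
    RetrievedChunk("faq-plans", 0.7),
    RetrievedChunk("faq-cancel", 0.1),
]
before = diagnose(trace, "faq-cancel")
after = diagnose(with_boost(trace, "faq-cancel", 1.0), "faq-cancel")
```

Separating the three outcomes matters because each suggests a different fix: a retrieval miss points to missing or unindexed content, a ranking issue to ranking adjustments, and a generation issue to the model or prompt.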

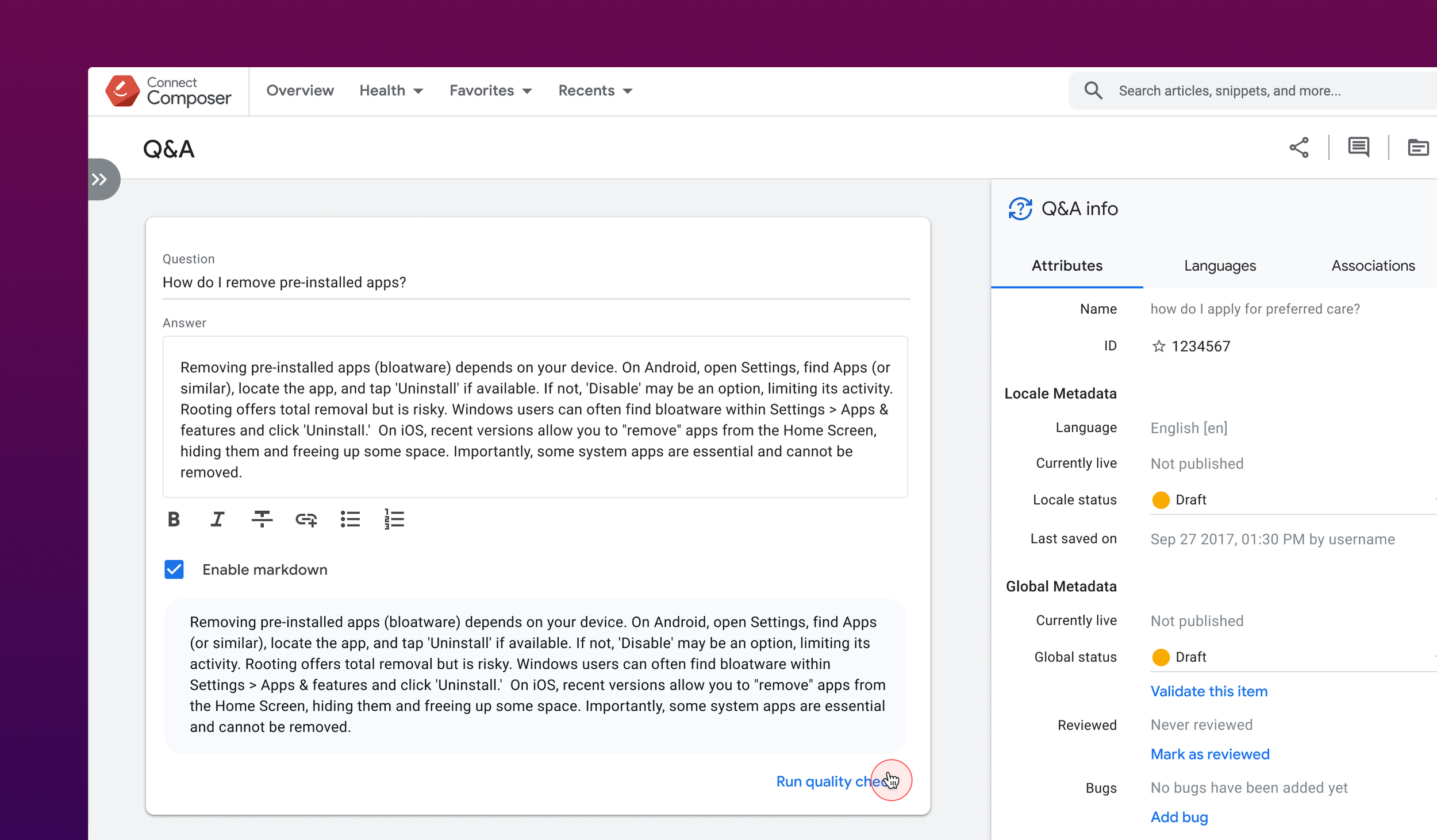

Composer

Empower your team to quickly address knowledge gaps by creating and publishing AI-only Q&A content with ease. These Q&A pairs have demonstrably improved generative answer factuality across all key products in 2024.